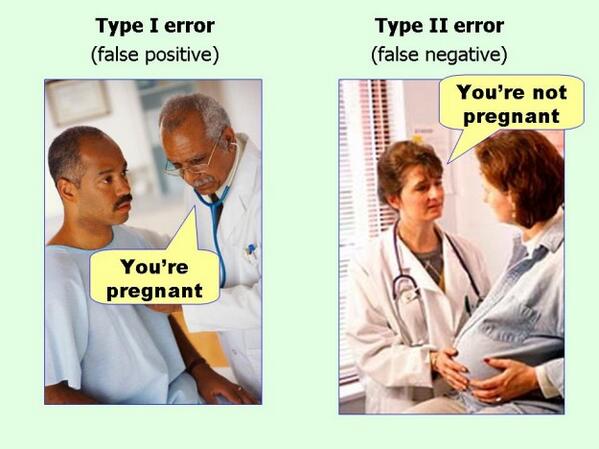

class: center, middle, inverse, title-slide .title[ # Reading Results Tables ] .subtitle[ ## GHM (2010): Tables ] .author[ ### Hussain Hadah (he/him) ] .date[ ### 06 February 2025 ] --- layout: true <div style="position: absolute;left:20px;bottom:5px;color:black;font-size: 12px;">Hussain Hadah (he/him) (Tulane) | GHM (2010): Tables | 06 February 2025</div> <!--- GHM (2010): Tables | 06 February 2025--> <style type="text/css"> /* Table width = 100% max-width */ .remark-slide table{ width: auto !important; /* Adjusts table width */ } /* Change the background color to white for shaded rows (even rows) */ .remark-slide thead, .remark-slide tr:nth-child(2n) { background-color: white; } .remark-slide thead, .remark-slide tr:nth-child(n) { background-color: white; } </style> --- class: title-slide background-image: url("assets/TulaneLogo-white.svg"), url("assets/title-image1.jpg") background-position: 10% 90%, 100% 50% background-size: 160px, 50% 100% background-color: #0148A4 # .text-shadow[.white[Outline for Slides]] <ol> <li><h4 class="white">General layout of statistical results tables</h4></li> <li><h5 class="white">Coefficients and standard errors – what they mean</h5></li> <li><h5 class="white">Constructing confidence intervals: 90%, 95%, and 99%</h5></li> <li><h5 class="white">Hypothesis testing: 10%, 5%, and 1% levels</h5></li> <li><h5 class="white">What do the *s beside the estimates mean?</h5></li> </ol> --- ## Quiz <svg viewBox="0 0 448 512" style="height:1em;display:inline-block;position:fixed;top:10;right:10;" xmlns="http://www.w3.org/2000/svg"> <path d="M0 464c0 26.5 21.5 48 48 48h352c26.5 0 48-21.5 48-48V192H0v272zm64-192c0-8.8 7.2-16 16-16h288c8.8 0 16 7.2 16 16v64c0 8.8-7.2 16-16 16H80c-8.8 0-16-7.2-16-16v-64zM400 64h-48V16c0-8.8-7.2-16-16-16h-32c-8.8 0-16 7.2-16 16v48H160V16c0-8.8-7.2-16-16-16h-32c-8.8 0-16 7.2-16 16v48H48C21.5 64 0 85.5 0 112v48h448v-48c0-26.5-21.5-48-48-48z"></path></svg> ### The quiz in on Thursday -- #### Quiz Topics - Geographical Definitions & Scavenger Hunt - Finding data on data.census.gov - Agglomeration, Clusters, and Cities - Practice Jigsaw - Economic Clusters - Greenstone, Hornbeck, & Moretti (2010) + Intro. to Diff-in-Diff - Reading Results Tables --- ## Q&A on Quiz Content -- - **Question:** How could the content from the cluster jigsaw appear on a quiz? - **Answer:** I will not ask you to provide anything detailed about any specific paper. --- ## Quiz timing, content, and logistics - The quiz will be 75 minutes long - Three short answer questions - Possibly one or two multiple choice questions - Start at 11:00 am and you will need to submit the quiz by 12:15 pm unless you have extra time (via Goldman) - It will be conducted on Canvas (so you will need a computer). - It is an open book quiz but you cannot work with anyone or communicate in any way with anyone. - No need for lockdown, zoom, or any other software - You do not need to take the quiz in the classroom. That is optional. - Please ask me any questions! If we are both in the room then come up and talk to me. Otherwise email me at hhadah@tulane.edu. I will monitor my email consistently. --- ## Next Week <svg viewBox="0 0 448 512" style="height:1em;display:inline-block;position:fixed;top:10;right:10;" xmlns="http://www.w3.org/2000/svg"> <path d="M0 464c0 26.5 21.5 48 48 48h352c26.5 0 48-21.5 48-48V192H0v272zm64-192c0-8.8 7.2-16 16-16h288c8.8 0 16 7.2 16 16v64c0 8.8-7.2 16-16 16H80c-8.8 0-16-7.2-16-16v-64zM400 64h-48V16c0-8.8-7.2-16-16-16h-32c-8.8 0-16 7.2-16 16v48H160V16c0-8.8-7.2-16-16-16h-32c-8.8 0-16 7.2-16 16v48H48C21.5 64 0 85.5 0 112v48h448v-48c0-26.5-21.5-48-48-48z"></path></svg> 1. Economic Incentives Briefing Note 2. Quiz on Thursday Feb 13th -- ### Readings <svg viewBox="0 0 576 512" style="height:1em;position:relative;display:inline-block;top:.1em;" xmlns="http://www.w3.org/2000/svg"> <path d="M542.22 32.05c-54.8 3.11-163.72 14.43-230.96 55.59-4.64 2.84-7.27 7.89-7.27 13.17v363.87c0 11.55 12.63 18.85 23.28 13.49 69.18-34.82 169.23-44.32 218.7-46.92 16.89-.89 30.02-14.43 30.02-30.66V62.75c.01-17.71-15.35-31.74-33.77-30.7zM264.73 87.64C197.5 46.48 88.58 35.17 33.78 32.05 15.36 31.01 0 45.04 0 62.75V400.6c0 16.24 13.13 29.78 30.02 30.66 49.49 2.6 149.59 12.11 218.77 46.95 10.62 5.35 23.21-1.94 23.21-13.46V100.63c0-5.29-2.62-10.14-7.27-12.99z"></path></svg> 3. .small[Briefing note readings:] 1. .small[Last Names A to D -> Neumark, Kolko - 2010] 2. .small[Last Names E to G -> Button - 2019] 3. .small[Last Names H to J -> Holmes - 1998] 4. .small[Last Names K to L -> Coates and Humphreys - The Stadium Gambit] 5. .small[Last Names M to O -> Moretti, Wilson - 2014] 6. .small[Last Names P to S -> Strauss-Kahn, Vives - 2009] 7. .small[Last Names T to Z -> Lee - 2008] --- ## General layout of statistical results tables .pull-left[ - Top numbers are the estimates. - Usually these are coefficient estimates from a regression, but sometimes they are just means or differences in means. - These tell you the estimated effect, difference, etc. - These tell you the magnitude of the effect or difference – was it small or large? Negative or positive? ] .pull-right[ <img src="02-class2_files/figure-html/table1-1.png" width="200%" style="display: block; margin: auto;" /> ] --- ## General layout of statistical results tables .pull-left[ - Under each estimate, in (), is the standard error (SE) of the estimate. - The SE tells us how precise the estimate is. How sure are we of this estimate? - Larger SE = less precise estimate, the estimate has a larger margin of error. - A confidence interval for this estimate would be wider (as we shall see). ] .pull-right[ <img src="02-class2_files/figure-html/table1-1-1.png" width="200%" style="display: block; margin: auto;" /> ] --- ## More tables .pull-left[ - I will explain more about all these tables later, but for not just notice the typical format: estimate with standard errors underneath it. Also notice the use of *s, which I will explain shortly. These indicate how statistically significant an estimate is. <img src="02-class2_files/figure-html/table3-1.png" width="100%" style="display: block; margin: auto;" /> ] .pull-right[ <img src="02-class2_files/figure-html/table2-1.png" width="100%" style="display: block; margin: auto;" /> ] --- ## Coefficients and standard errors – what they mean - Estimate (top number) tell us the effect that was estimated and what the magnitude of the effect was. - The standard error tells us how precise that estimate is (how much margin of error does it have?) - Here are some examples of coefficients that may make this easier to understand for those of you who haven’t taken econometrics or any statistics courses that use regression. --- ## Coefficients and standard errors – what they mean - Suppose I estimated the mean (average) productivity of firms in county A and in county B. These are hypothetical numbers. - County A = 100, with a standard error of 10. - County B = 90, with a standard error of 12. - The difference (A – B) is 10, and suppose it has a standard error of 15. - Let’s focus on this estimate of 10, with a standard error of 15. - The estimate of 10 tells us that county A’s productivity is estimated to be 10 higher than county B’s productivity, on average. - The standard error is 15, which is fairly high. - How do we use this standard error to tell us how precise our estimate is? - The best way to do it is by using it to construct a confidence interval. --- ## Constructing confidence intervals - There are usually three confidence intervals that (social) scientists create: 90%, 95%, and 99% confidence intervals. - The intuitive<sup>*</sup> way to understand these is: - The 90% (95%, 99%) confidence interval tells us that, under the assumption that our statistical model is correct, the true effect we are measuring lies within our confidence interval 90% (95%, 99%) of the time. - For example, suppose the 95% confidence interval of an estimate was (-0.3 to 0.1). Then we are 95% confident that the true effect, the thing we are estimating, lies between -0.3 and 0.1. - Thus, this confidence intervals tell us how sure we are of our estimates, since it’s impossible to be sure what they are exactly, given randomness and noise in the data.<sup>1</sup> .pull-right[.footnote[<sup>*</sup> For those with more theoretical stats training, you’ll know that this intuitive explanation isn’t technically correct, but I am not looking to explain to beginners the difference between frequentist and Bayesian statistics or the repeated sampling nature of classical statistics.] ] --- ## Constructing confidence intervals - How do we make 90%, 95%, and 99% confidence intervals? - The general formula is: - Lower bound: Estimate – critical value * standard error - Upper bound: Estimate + critical value * standard error - Where the critical value is 1.645 for a 90% interval, 1.96 for 95%, and 2.576 for 99%. - Going back to our original example, we had an estimate of 10 and a standard error of 15 - Lower bound: 10 – critical value * 15 - Upper bound: 10 + critical value * 15 - Where the critical value is 1.645 for a 90% interval, 1.96 for 95%, and 2.576 for 99%. - Lower bound: 10 – critical value * 15 = 10 – 1.645*15 = 10 – 24.675 = -14.675 - Upper bound: 10 + critical value * 15 = 10 + 1.645*15 = 10 + 24.675 = 34.675 - Therefore, the 90% confidence interval is (-14.675, 34.675). --- ## Constructing confidence intervals – 90% - Lower bound: 10 – critical value * 15 = 10 – 1.645*15 = 10 – 24.675 = -14.675 - Upper bound: 10 + critical value * 15 = 10 + 1.645*15 = 10 + 24.675 = 34.675 - Therefore, the 90% confidence interval is (-14.675, 34.675). --- ## Constructing confidence intervals – 95% - Lower bound: 10 – critical value * 15 = 10 – 1.96*15 = 10 – 29.4 = -19.4 - Upper bound: 10 + critical value * 15 = 10 + 1.96*15 = 10 + 29.4 = 39.4 - Therefore, the 95% confidence interval is (-19.4, 39.4). --- ## Constructing confidence intervals – 95% .pull-left[ <img src="02-class2_files/figure-html/hot-tip-1.png" width="100%" style="display: block; margin: auto;" /> ] .pull-right[ - Lower bound: 10 – critical value * 15 = 10 – 1.96*15 = 10 – 29.4 = -19.4 - Upper bound: 10 + critical value * 15 = 10 + 1.96*15 = 10 + 29.4 = 39.4 - Therefore, the 95% confidence interval is (-19.4, 39.4). - **1.96 is very close to 2**, so you can calculate an “eye-ball” confidence interval (not a technical term) by using 2: - Lower = 10 – 2*15 = 10 – 30 = -20 - Upper = 10 + 2*15 = 10 + 30 = 40 ] --- ## Constructing confidence intervals – 99% - Lower bound: 10 – critical value * 15 = 10 – 2.576*15 = 10 – 38.64 = -28.64 - Upper bound: 10 + critical value * 15 = 10 + 2.576*15 = 10 + 38.64 = 48.64 - Therefore, the 99% confidence interval is (-28.64, 48.64). --- ## Comparing confidence intervals - For our example of an estimate of 10, with a standard error of 15, our confidence intervals are: - The 90% confidence interval is (-14.675, 34.675). - The 95% confidence interval is (-19.4, 39.4). - The 99% confidence interval is (-28.64, 48.64). - Notice how as we move higher in confidence, the confidence interval grows. - To be more sure that our interval contains the true value (higher % confidence), we have to increase the interval. --- class: segue-yellow background-image: url("assets/TulaneLogo.svg") background-size: 20% background-position: 95% 95% # Confidence Intervals Examples --- ## Example of confidence intervals <img src="02-class2_files/figure-html/unnamed-chunk-3-1.png" width="80%" style="display: block; margin: auto;" /> --- class: segue-yellow background-image: url("assets/TulaneLogo.svg") background-size: 20% background-position: 95% 95% # Confidence Intervals Activity --- ## After Class Activity – Calculating confidence intervals <svg viewBox="0 0 448 512" style="height:1em;display:inline-block;position:fixed;top:10;right:10;" xmlns="http://www.w3.org/2000/svg"> <path d="M144 479H48c-26.5 0-48-21.5-48-48V79c0-26.5 21.5-48 48-48h96c26.5 0 48 21.5 48 48v352c0 26.5-21.5 48-48 48zm304-48V79c0-26.5-21.5-48-48-48h-96c-26.5 0-48 21.5-48 48v352c0 26.5 21.5 48 48 48h96c26.5 0 48-21.5 48-48z"></path></svg> - For this, I’m going to have you calculate “eye-ball” 95% confidence intervals, i.e. using 2 instead of 1.96 for the critical value. - Therefore, the formula is: - Lower bound = estimate – 2*SE, Upper bound = estimate + 2*SE - Remember order of operations -> multiply SE by 2 first! - The short quiz “Confidence Interval Calculation” on Canvas. --- class: segue-yellow background-image: url("assets/TulaneLogo.svg") background-size: 20% background-position: 95% 95% # Hypothesis Testing --- ## Hypothesis testing - In addition to calculating confidence intervals, we often do hypothesis testing. - Mostly, we test to see if our estimates are statistically significantly different from zero. - This is, are we reasonably sure that the true value, which we estimated, is different from zero? - Different from zero is useful to test because if it is different from zero, then it implies that there is likely an effect or a difference. - If an estimate is not statistically significantly different from zero, we don’t have enough statistical evidence to claim that there is an effect. --- ## Hypothesis testing: 10%, 5%, and 1% levels - We typically test for statistical significance at the following levels: - 10%, which corresponds to a 90% confidence interval, - 5%, which corresponds to a 95% confidence interval, - 1%, which corresponds to a 99% confidence interval. - The 10%, 5%, and 1% here refer to the amount of what is called “Type 1 error”, which can be interpreted as a false positive rate (finding an effect that does not exist). - Under 10% (5%, 1%), you will find an effect (difference from zero) that does not actually exist 10% (5%, 1%) of the time. --- ## Balancing Type 1 and Type 2 Error - Statistics tries to balance to types of error: - Type 1 error -> “false positive” - E.g., finding an effect where there is actually no effect. - A positive test result when really the person is negative. - Type 2 error -> “false negative” - E.g., finding no effect (not statistically different from zero) when really there is an effect. - A negative test result when really the person is positive. --- ## Balancing Type 1 and Type 2 Error <img src="02-class2_files/figure-html/errors-and-balance-3.png" width="50%" style="display: block; margin: auto;" /> If we decrease the level that we test at (e.g., from 5% to 1%, which would be the same as moving from a 95% confidence interval to a 99% confidence interval) then we decrease the probability of making Type 1 Errors (fewer false positives) but we increase the probability of making Type 2 Errors (more false negatives). --- .center[] --- ## Which Type of error is this concept minimizing? > It is better that ten guilty persons escape than that one innocent suffer. - William Blackstone -- - In the context of the criminal justice system - Type I error occurs when an innocent person is wrongfully convicted - Type II error, or "false negative," occurs when a guilty person is wrongfully acquitted --- ## Hypothesis testing formula - To do a hypothesis test, at any level (10%, 5%, 1%), to see if our estimate is statistically different from zero, we first calculate a t-statistic as follows: $$ t = \frac{\text{estimate} - 0}{\text{SE}} $$ - The formula for hypothesis testing is: - Test statistic = (estimate – null hypothesis value) / standard error - The null hypothesis value is usually zero, but it can be any value. - The standard error is the standard error of the estimate. -- - E.g., if the coefficient is 0.2 and the standard error is 0.1, the t-statistic is 2. - E.g., if the coefficient is -2 and the standard error is 2, the t-statistic is -1. --- ## Hypothesis testing formula - Once we have our t-statistic, we compare it to a critical value. - These are the same critical values used to create confidence intervals. - The critical values are… - 1.645 for a test at the 10% level of significance (90% confidence interval) - 1.96 for a test at the 5% level of significance (95% confidence interval) - 2.576 for a test at the 1% level of significance (99% confidence interval) - If our critical value is, in **absolute value**, greater than that critical value, then it is at least significant at that level. - `\(|t-statistic| \ge \text{critical value}\)` - The | | means “take the absolute value of” - So, if your t-statistic is negative (i.e. your estimate is negative), then just multiply it by -1 to make it positive. --- ## Hypothesis testing Example - 1.645 for a test at the 10% level of significance - 1.96 for a test at the 5% level of significance - 2.576 for a test at the 1% level of significance - `\(|t-statistic| \ge \text{critical value}\)` - Suppose our t-statistic is 2.2. - It’s greater in absolute value than 1.645 and 1.96, but not 2.576. - Therefore it is significant at the 5% level, but not the 1% level. - Suppose our t-statistic is -1.7. - It’s greater in absolute value than 1.645, but not 1.96 or 2.576. - Therefore it is significant at the 10% level, but not the 5% or 1% levels. --- ## Hypothesis testing Example .pull-left[ - Instead of using 1.96 as the critical value to test at the 5% level, use 2. - The “eye-ball” t-test at the 5% level is just dividing the coefficient by the standard error and seeing if that t-statistic is greater than 2 in absolute value. - You can often do this just by looking at coefficient estimates with their standard errors in tables. - E.g., 0.038 (0.017) - I can see that that’s bigger than 2. ] .pull-right[ <img src="02-class2_files/figure-html/hot-tip2-1.png" width="100%" style="display: block; margin: auto;" /> ] --- ## After Class Activity – t-statistics and hypothesis testing <svg viewBox="0 0 448 512" style="height:1em;display:inline-block;position:fixed;top:10;right:10;" xmlns="http://www.w3.org/2000/svg"> <path d="M144 479H48c-26.5 0-48-21.5-48-48V79c0-26.5 21.5-48 48-48h96c26.5 0 48 21.5 48 48v352c0 26.5-21.5 48-48 48zm304-48V79c0-26.5-21.5-48-48-48h-96c-26.5 0-48 21.5-48 48v352c0 26.5 21.5 48 48 48h96c26.5 0 48-21.5 48-48z"></path></svg> - Let’s take a break from lecture to calculate some intervals. - For this, I’m going to have you do hypothesis tests (“t-tests”) using the “eye-ball” method, i.e. using 2 instead of 1.96 for the critical value. - Therefore, the formula is: - `\(|t-statistic| \ge 2\)` --- ## What do the *s beside the estimates in tables mean? .pull-left[ - Usually statistical tables have notes under them that detail what *, **, *** - More *s means more statistically significant -> we are even more sure that there is an effect (i.e. that the estimate is different). The risk of Type 1 error (false positive) is lower as significance increases. <img src="02-class2_files/figure-html/table3-2-1.png" width="90%" style="display: block; margin: auto;" /> ] .pull-right[ <img src="assets/table-2.png" alt="image" width="70%" height="auto"> ] --- ## What do the *s beside the estimates in tables mean? - Most tables use the following convention, but check the table notes to be sure. - No *s means not statistically significant at the 10% level (or any more stringent levels: 5%, 1%, etc.). - * means statistically significantly different from zero at the 10% level. - Or, zero does not fall into the 90% confidence interval - ** means statistically significantly different from zero at the 5% level. - Or, zero does not fall into the 95% confidence interval - *** means statistically significantly different from zero at the 1% level. - Or, zero does not fall into the 99% confidence interval --- ## What do the *s beside the estimates in tables mean? - Note: anything significant at the 1% level (***) is also significant at the 5% level (**) and the 10% level (*). - Similarly, anything significant at the 5% level (**) is also significant at the 10% level (*). - Testing at the 5% level is the most common benchmark of statistical significance used. - So, when researchers say something is statistically significant, they usually mean that it’s statistically significant at at least the 5% level. - The 1% level is the strongest conventional level, although you can test at any level (e.g., some researchers test at the 0.1% level). --- ## What do the *s beside the estimates in tables mean? - You can use the * system to quickly see how significant estimates are. - This avoids you having to do more time-intensive ways at gauging the statistical significant of the estimates, such as: - Calculating a t-statistic (coefficient divided by standard error) and seeing if it’s greater than two (which would mean its significant at the 5% level). - Calculating a confidence interval. - Again, just be sure to check the table notes to be sure you are interpreting the * system correctly. --- ## Other table conventions – t-stats in () - The majority of social sciences, outside of usually psychology, tend to present statistical results the way I detailed here: - Estimate, with standard errors in () underneath - However, some fields or older papers put **t-statistics** underneath the estimates. .pull-left[ - So instead of 2.0 (1.0) ] .pull-right[ - They would have: 2.0 (2.0) ] - Check the table notes so you know what is in the ()! --- ## Other table conventions – p-value in () - The majority of social sciences, outside of usually psychology, tend to present statistical results the way I detailed here: - Estimate, with standard errors in () underneath - However, some fields or older papers put p-values underneath the estimates. .pull-left[ - So instead of 1.96 (1.0) ] .pull-right[ - They would have: 1.96 (0.05) or 1.96 `\([0.05]\)` ] - The p-value is significance level, so 0.05 means significant at the 5% level. - A p-value of 0.01 means significant at the 1% level, etc. --- class: segue-yellow background-image: url("assets/TulaneLogo.svg") background-size: 20% background-position: 95% 95% # Difference-in-differences R Code: Optional <svg viewBox="0 0 640 512" style="height:1em;display:inline-block;position:fixed;top:10;right:10;" xmlns="http://www.w3.org/2000/svg"> <path d="M278.9 511.5l-61-17.7c-6.4-1.8-10-8.5-8.2-14.9L346.2 8.7c1.8-6.4 8.5-10 14.9-8.2l61 17.7c6.4 1.8 10 8.5 8.2 14.9L293.8 503.3c-1.9 6.4-8.5 10.1-14.9 8.2zm-114-112.2l43.5-46.4c4.6-4.9 4.3-12.7-.8-17.2L117 256l90.6-79.7c5.1-4.5 5.5-12.3.8-17.2l-43.5-46.4c-4.5-4.8-12.1-5.1-17-.5L3.8 247.2c-5.1 4.7-5.1 12.8 0 17.5l144.1 135.1c4.9 4.6 12.5 4.4 17-.5zm327.2.6l144.1-135.1c5.1-4.7 5.1-12.8 0-17.5L492.1 112.1c-4.8-4.5-12.4-4.3-17 .5L431.6 159c-4.6 4.9-4.3 12.7.8 17.2L523 256l-90.6 79.7c-5.1 4.5-5.5 12.3-.8 17.2l43.5 46.4c4.5 4.9 12.1 5.1 17 .6z"></path></svg> --- ## Difference-in-differences R Code - This tutorial is base on David Card and Alan B. Krueger (1994) "Minimum Wages and Employment: A Case Study of the Fast-Food Industry in New Jersey and Pennsylvania" *American Economic Review* 84(4): 772-793. --- ## Difference-in-differences R Code - First, we need to load the data and install the packages we will use. - The dataset could be downloaded from [here](https://raw.githubusercontent.com/hhadah/urban-econ/main/slides/03-week3/02-thur/assets/minwage_short.csv) ``` r # Load the data library(haven) library(tidyverse) library(dplyr) library(readr) library(broom) urlfile = "https://raw.githubusercontent.com/hhadah/urban-econ/main/slides/03-week3/02-thur/assets/minwage_short.csv" # Load the data data <- read_csv(url(urlfile)) names(data) ``` ``` ## [1] "state" "empft1" "emppt1" "nmgrs1" ## [5] "empft2" "emppt2" "nmgrs2" "store_id" ## [9] "emptot1" "emptot2" "store_has_data" ``` --- ## Difference-in-differences R Code - Filter missing data ``` r min_wage_data <- data %>% filter(!(is.na(emptot1)), !(is.na(emptot2))) names(min_wage_data) ``` ``` ## [1] "state" "empft1" "emppt1" "nmgrs1" ## [5] "empft2" "emppt2" "nmgrs2" "store_id" ## [9] "emptot1" "emptot2" "store_has_data" ``` - Create a new variable `treat` to indicate the treatment group ``` r min_wage_data <- min_wage_data %>% mutate(nj = state == 1) ``` --- ## Do Difference-in-differences by hand ``` r nj_before <- min_wage_data %>% filter(nj == 1) %>% summarise(mean_empt1 = mean(emptot1), sd_empt1 = sd(emptot1)) nj_after <- min_wage_data %>% filter(nj == 0) %>% summarise(mean_empt1 = mean(emptot1), sd_empt1 = sd(emptot1)) pa_before <- min_wage_data %>% filter(nj == 1) %>% summarise(mean_empt2 = mean(emptot2), sd_empt2 = sd(emptot2)) pa_after <- min_wage_data %>% filter(nj == 0) %>% summarise(mean_empt2 = mean(emptot2), sd_empt2 = sd(emptot2)) ``` --- ## Do Difference-in-differences by hand .pull-left[ ``` r nj_after ``` ``` ## # A tibble: 1 x 2 ## mean_empt1 sd_empt1 ## <dbl> <dbl> ## 1 23.4 12.0 ``` ``` r nj_before ``` ``` ## # A tibble: 1 x 2 ## mean_empt1 sd_empt1 ## <dbl> <dbl> ## 1 20.4 9.21 ``` ] .pull-right[ ``` r pa_after ``` ``` ## # A tibble: 1 x 2 ## mean_empt2 sd_empt2 ## <dbl> <dbl> ## 1 21.1 8.38 ``` ``` r pa_before ``` ``` ## # A tibble: 1 x 2 ## mean_empt2 sd_empt2 ## <dbl> <dbl> ## 1 20.9 9.38 ``` ] ``` r nj_after - nj_before - (pa_after - pa_before) ``` ``` ## mean_empt1 sd_empt1 ## 1 2.75 3.803228 ``` --- ## Do Difference-in-differences by hand ### Now, change over time (after - before) ``` r min_wage_data <- min_wage_data %>% mutate(demp = (emptot2 - emptot1)) summary_demp <- min_wage_data %>% group_by(state) %>% summarise(mean = mean(demp), sd = sd(demp)) summary_demp ``` ``` ## # A tibble: 2 x 3 ## state mean sd ## <dbl> <dbl> <dbl> ## 1 0 -2.28 10.9 ## 2 1 0.467 8.45 ``` --- ## Difference-in-differences in regression form ``` r DD_Regression <- lm(demp ~ nj, data = min_wage_data) tidy(DD_Regression) ``` ``` ## # A tibble: 2 x 5 ## term estimate std.error statistic p.value ## <chr> <dbl> <dbl> <dbl> <dbl> ## 1 (Intercept) -2.28 1.04 -2.21 0.0280 ## 2 njTRUE 2.75 1.15 2.38 0.0177 ```